Structured logging in .NET

Structured logging is an approach to logging that captures data in a way that's easy to parse and analyze, unlike traditional logging, which often involves plain text messages. In structured logging, log entries are composed of discrete fields, such as time stamps, log levels, messages, and other contextual information.

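Here is an example. The `@` prefix in `{@Request}` is Serilog's destructuring operator, which captures the object's properties as fields instead of its `ToString()` value: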

_logger.LogInformation("Request received {@Request}", request);

Why is it important? There are a few main reasons.

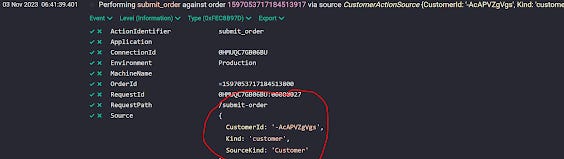

Searchability and Filtering: Structured logs are easier to search and filter because each part of the log entry is a distinct field. This makes it straightforward to find all logs related to a specific event, error code, or user action. For example, when logs are written in JSON format, each field can be queried directly instead of being matched against free text.
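To make the point about field-based filtering concrete, here is a minimal sketch that filters JSON log lines by field using `System.Text.Json`. The sample log lines and the field names (`Level`, `Message`, `UserId`, `Path`) are assumptions made up for illustration; real output depends on your logging library and sink configuration.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

public static class LogSearchDemo
{
    // Hypothetical JSON log lines; the field names are illustrative only.
    public static readonly string[] SampleLines =
    {
        "{\"Level\":\"Information\",\"Message\":\"Request received\",\"UserId\":42,\"Path\":\"/orders\"}",
        "{\"Level\":\"Error\",\"Message\":\"Request failed\",\"UserId\":7,\"Path\":\"/orders\"}",
        "{\"Level\":\"Error\",\"Message\":\"Request failed\",\"UserId\":42,\"Path\":\"/cart\"}",
    };

    // Returns the Path of every Error entry logged for the given user.
    public static List<string> ErrorPathsForUser(IEnumerable<string> lines, int userId)
    {
        var result = new List<string>();
        foreach (var line in lines)
        {
            using var doc = JsonDocument.Parse(line);
            var root = doc.RootElement;

            // Each condition targets one field; no text pattern matching is needed.
            if (root.GetProperty("Level").GetString() == "Error" &&
                root.GetProperty("UserId").GetInt32() == userId)
            {
                result.Add(root.GetProperty("Path").GetString() ?? string.Empty);
            }
        }
        return result;
    }
}
```

Calling `LogSearchDemo.ErrorPathsForUser(LogSearchDemo.SampleLines, 42)` selects only the third entry. In practice a log aggregation tool would run this kind of query for you, but the principle is the same.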

Performance: With structured logging, the message template and its arguments are passed to the logger separately, and libraries such as Serilog or NLog defer rendering the message until they know the log level is enabled. This means you avoid unnecessary string formatting if, for example, your debug logs are turned off in a production environment.
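The deferred formatting described above can be observed with a small sketch. It assumes the `Microsoft.Extensions.Logging.Abstractions` package is referenced and uses `NullLogger.Instance`, which reports every level as disabled, as a stand-in for a production logger with debug output turned off. `CountingValue` is a hypothetical helper type introduced only to count `ToString()` calls.

```csharp
using System;
using Microsoft.Extensions.Logging;
using Microsoft.Extensions.Logging.Abstractions;

// Hypothetical helper that counts how often it is rendered to text.
public sealed class CountingValue
{
    public int ToStringCalls { get; private set; }

    public override string ToString()
    {
        ToStringCalls++;
        return "rendered";
    }
}

public static class DeferredFormattingDemo
{
    // Returns the number of ToString() calls for each logging style.
    public static (int Template, int Interpolated) Run()
    {
        // NullLogger discards everything, like a logger whose level is disabled.
        ILogger logger = NullLogger.Instance;

        // Message template: the argument is handed over as an object
        // and is only rendered if a provider actually writes the entry.
        var templateValue = new CountingValue();
        logger.LogDebug("Processing {Value}", templateValue);

        // String interpolation: the string is built before LogDebug is even called.
        var interpolatedValue = new CountingValue();
        logger.LogDebug($"Processing {interpolatedValue}");

        return (templateValue.ToStringCalls, interpolatedValue.ToStringCalls);
    }
}
```

With the logger disabled, the template call leaves the counter at zero, while the interpolated call has already rendered the value once. Note that the template call still allocates an argument array; on hot paths you may also want to guard the call with `logger.IsEnabled(LogLevel.Debug)`.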

Why shouldn't you use string interpolation in logging?

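Using string interpolation in logging undermines your ability to search or filter log entries efficiently, because the values are baked into the message text instead of being captured as separate fields. It also builds and allocates a new string on every call, even when the log level is disabled. And because the resulting message text differs from call to call, the logger cannot treat it as a constant template that is parsed once and reused. In the line below, the request is additionally serialized to JSON every time, whether or not the entry is ever written.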

_logger.LogInformation($"Request received {JsonSerializer.Serialize(request)}");
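In contrast to the interpolated call above, a constant template can be parsed once and reused for every entry. One way to sketch this with `Microsoft.Extensions.Logging` is `LoggerMessage.Define`. The class name `RequestLog`, the event id, and the `{Path}` property are assumptions chosen for illustration, not part of the original example.

```csharp
using System;
using Microsoft.Extensions.Logging;

public static class RequestLog
{
    // The template is parsed once, when this static field is initialized,
    // and the resulting delegate is reused for every log call.
    private static readonly Action<ILogger, string, Exception?> RequestReceivedMessage =
        LoggerMessage.Define<string>(
            LogLevel.Information,
            new EventId(1, "RequestReceived"), // hypothetical event id
            "Request received {Path}");

    // Strongly typed entry point; Path is captured as a named field.
    public static void RequestReceived(ILogger logger, string path) =>
        RequestReceivedMessage(logger, path, null);
}
```

A call site would then look like `RequestLog.RequestReceived(_logger, request.Path)`, assuming the request exposes a `Path` property. The plain `_logger.LogInformation("Request received {Path}", path)` form is usually sufficient; the delegate approach mainly pays off on frequently executed code paths.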

In conclusion, use structured logging with care. Because it makes logging entire objects so easy, it is also easy to log sensitive information or excessively large objects by accident. That can lead to privacy and security problems, and to performance issues caused by the size and complexity of the log data. Establish and follow clear rules about what data may be logged, so that your logs stay informative and valuable without compromising security or performance.
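One simple way to follow this advice is to log only the specific fields you need instead of the whole object. The sketch below assumes a hypothetical `LoginRequest` type with a sensitive `Password` property; the type and its property names are invented for illustration.

```csharp
using System;
using Microsoft.Extensions.Logging;

// Hypothetical request type; the property names are illustrative only.
public sealed record LoginRequest(string UserName, string Password, string Path);

public static class SafeLoggingDemo
{
    // Logs only the fields that are safe and useful.
    // The Password property is never passed to the logger.
    public static void LogRequest(ILogger logger, LoginRequest request) =>
        logger.LogInformation(
            "Request received for {UserName} at {Path}",
            request.UserName,
            request.Path);
}
```

Naming each field explicitly keeps the log entry small and makes it obvious in code review what leaves the application. Whether a field such as `UserName` is acceptable to log depends on your own privacy requirements.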